Microsoft 365 Copilot: Protect and govern your sensitive data with Microsoft Purview

Dive deeper into Microsoft 365 Copilot beyond its flashy features. Microsoft MVP Jasper Oosterveld covers challenges and tools for understanding your data and safeguarding sensitive information.

Since ChatGPT hit the scene, generative AI has skyrocketed. In under two months, more than 100 million users hopped aboard. Hot on its heels, Microsoft rolled out Microsoft 365 Copilot in November 2023 to boost employee productivity.

In a nutshell, Copilot helps teams find, create, and share internal information.

But with great power comes great responsibility, especially when it comes to keeping your data safe. So, I want to shift the focus from the glitz of the features you’d find in Copilot to a crucial aspect: safeguarding your organization from potential data breaches while using it.

Tackling the challenges of Microsoft 365 Copilot

Have you done a quick search for data breaches? It's like opening Pandora's box of cybersecurity nightmares. Each breach not only stains an organization's reputation but also shakes the trust of its customers or users. And in today's connected world, losing trust can mean losing business.

Don’t get me wrong, Copilot has enormous potential and great features, but it does come with its fair share of challenges. One big one is making sure sensitive information doesn’t end up in the wrong hands.

Tools for understanding your data

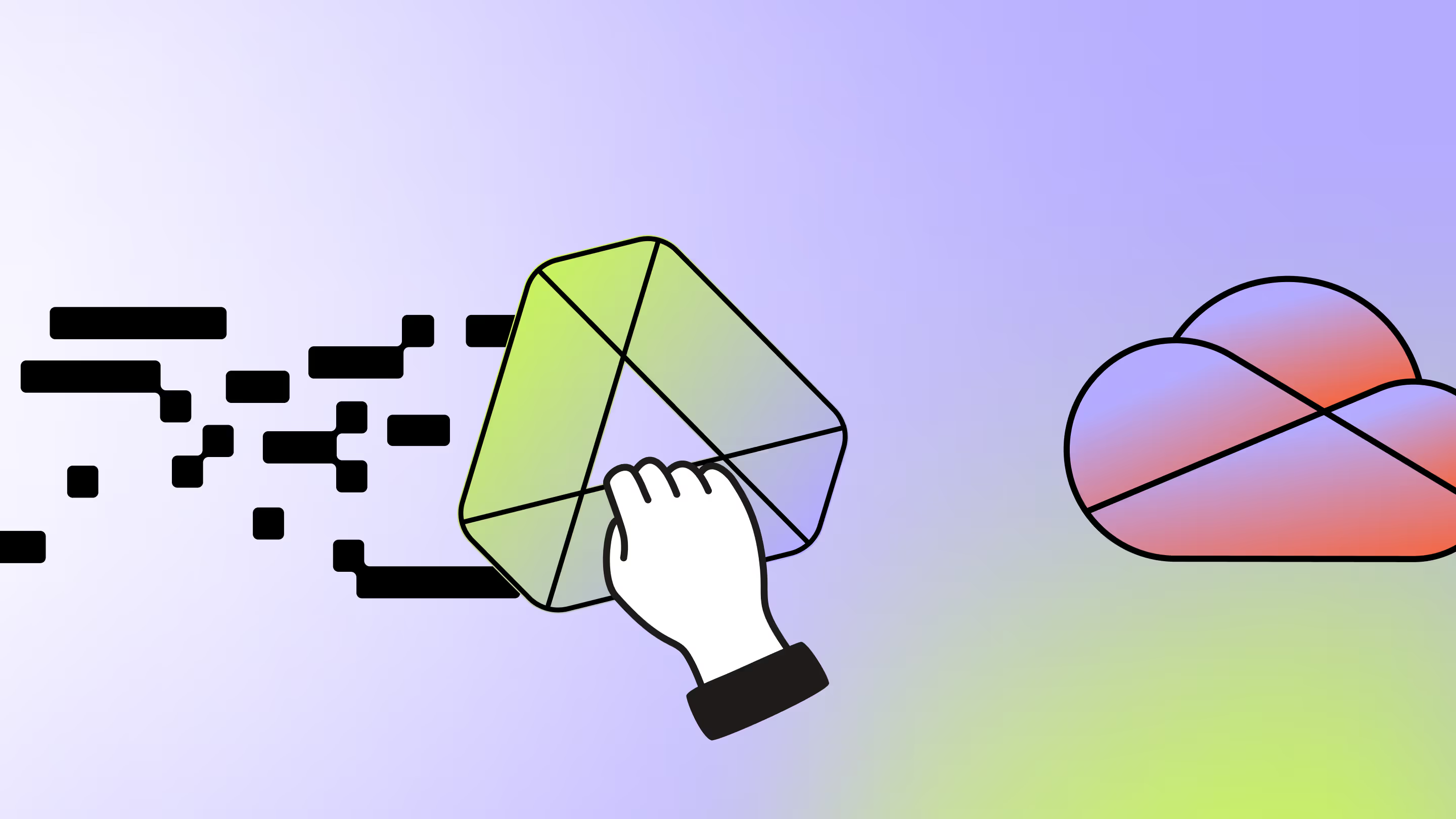

To recap, Copilot helps employees find, process, and share information. These actions take place not only within Microsoft 365 but also outside of it, like when copying and pasting sensitive information into ChatGPT or Google Gemini. So, it’s crucial to understand how your sensitive information is stored and used with Copilot and external AI services.

The following Microsoft tools help you understand your data.

Data security posture management for AI

Microsoft offers data security posture management for AI in the Microsoft Purview portal.

Within it, you can see how Copilot and any external AI services are being used. It gives you a detailed overview of activities involving your sensitive information.

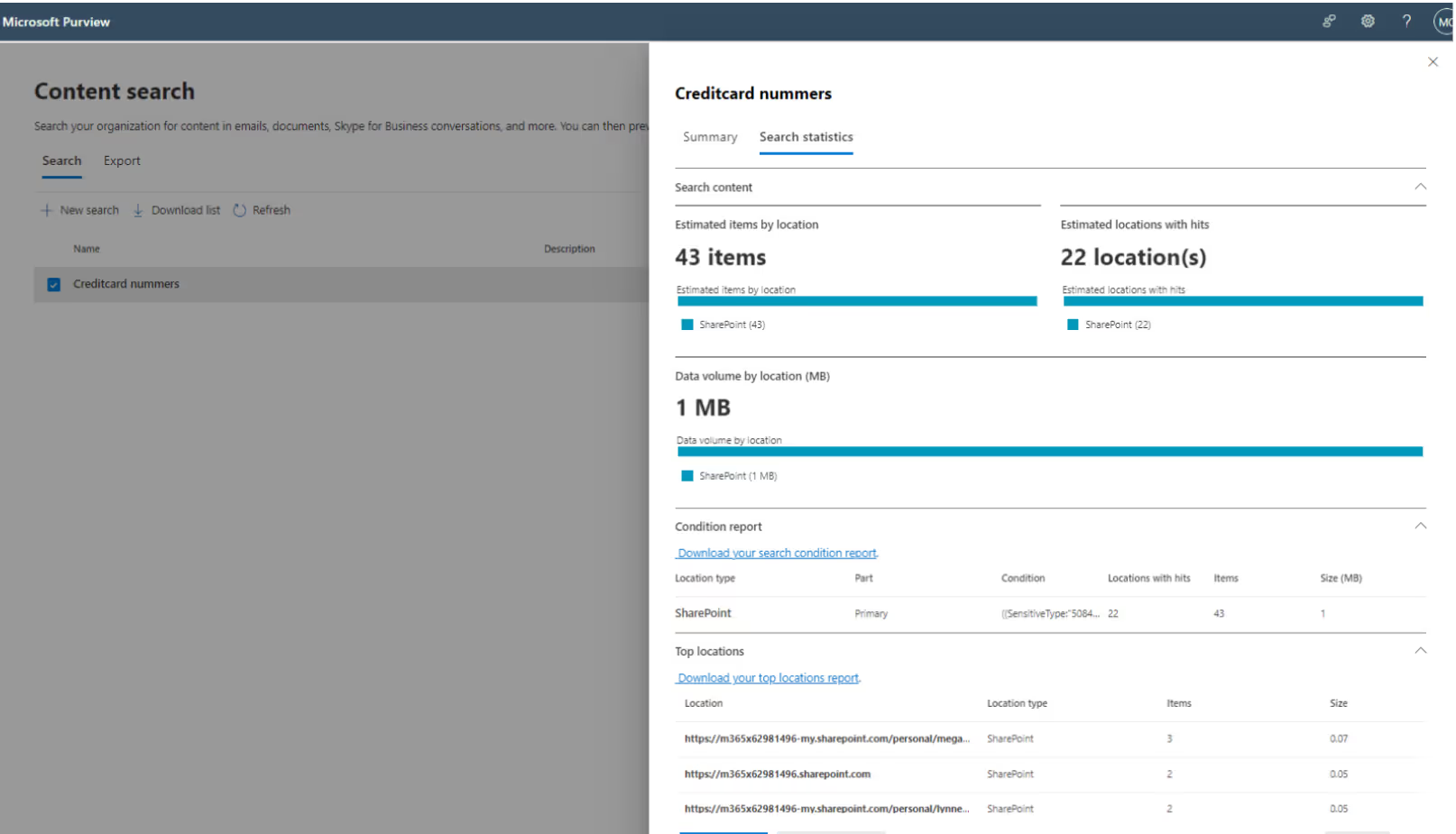

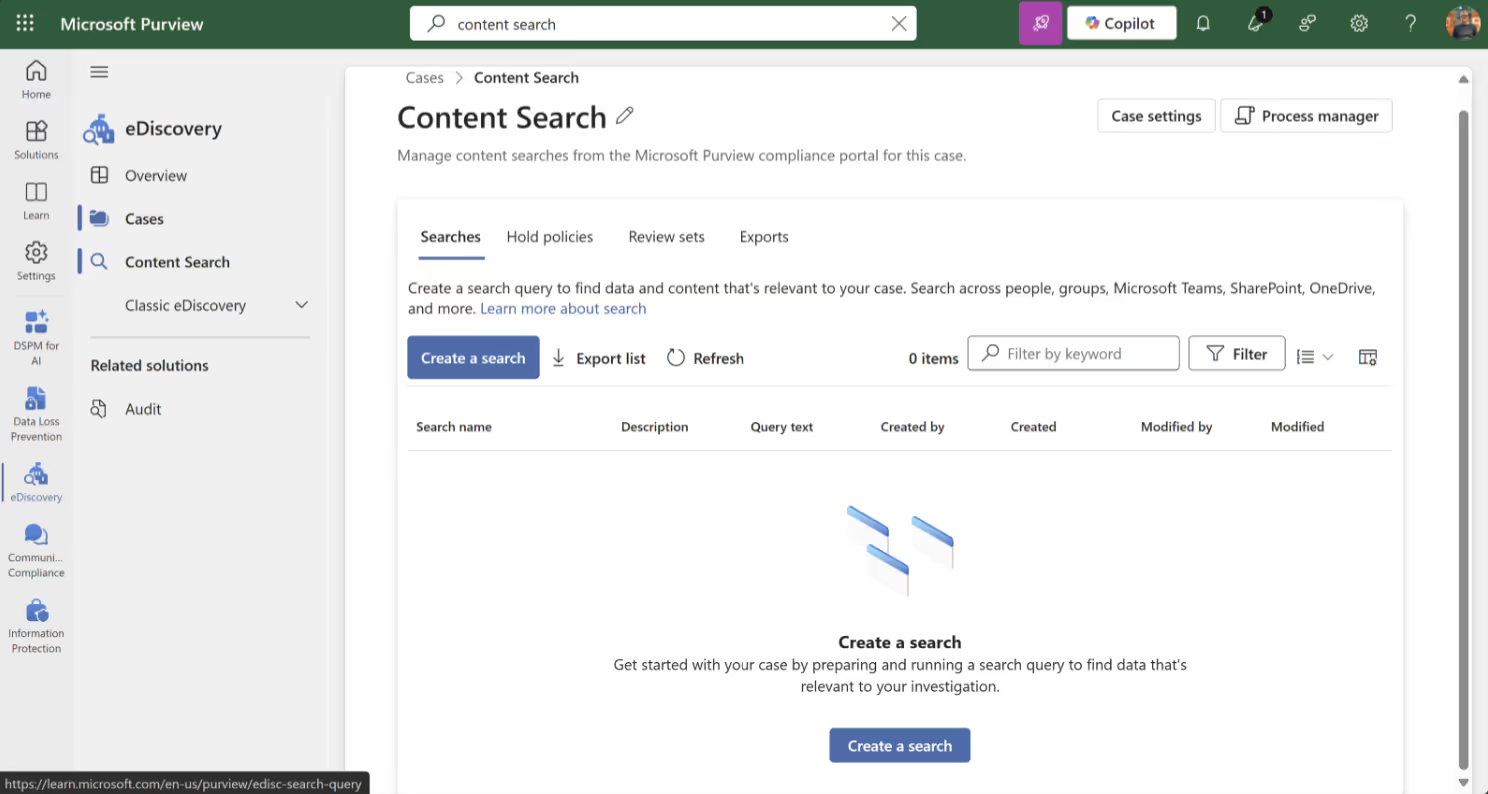

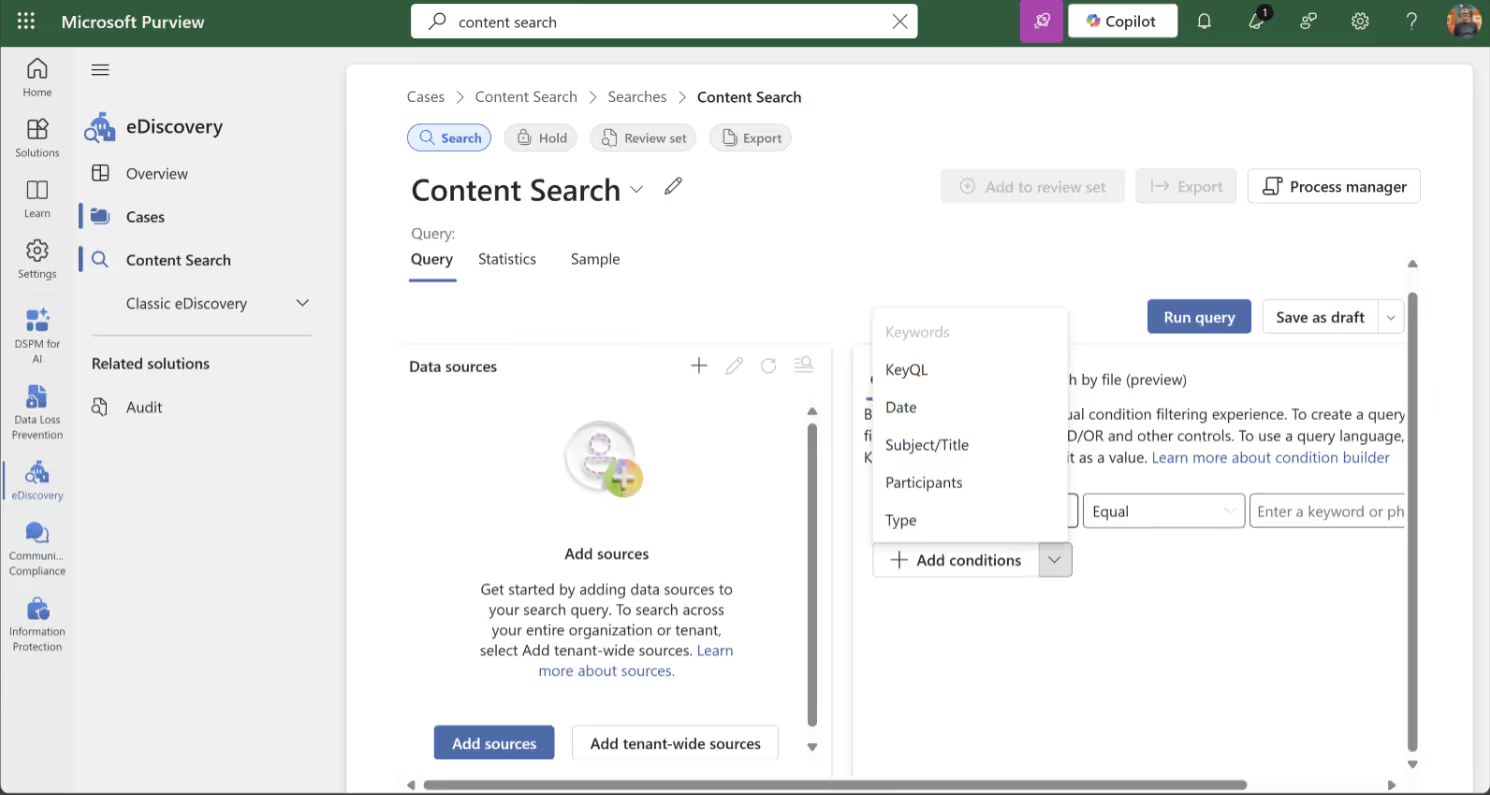

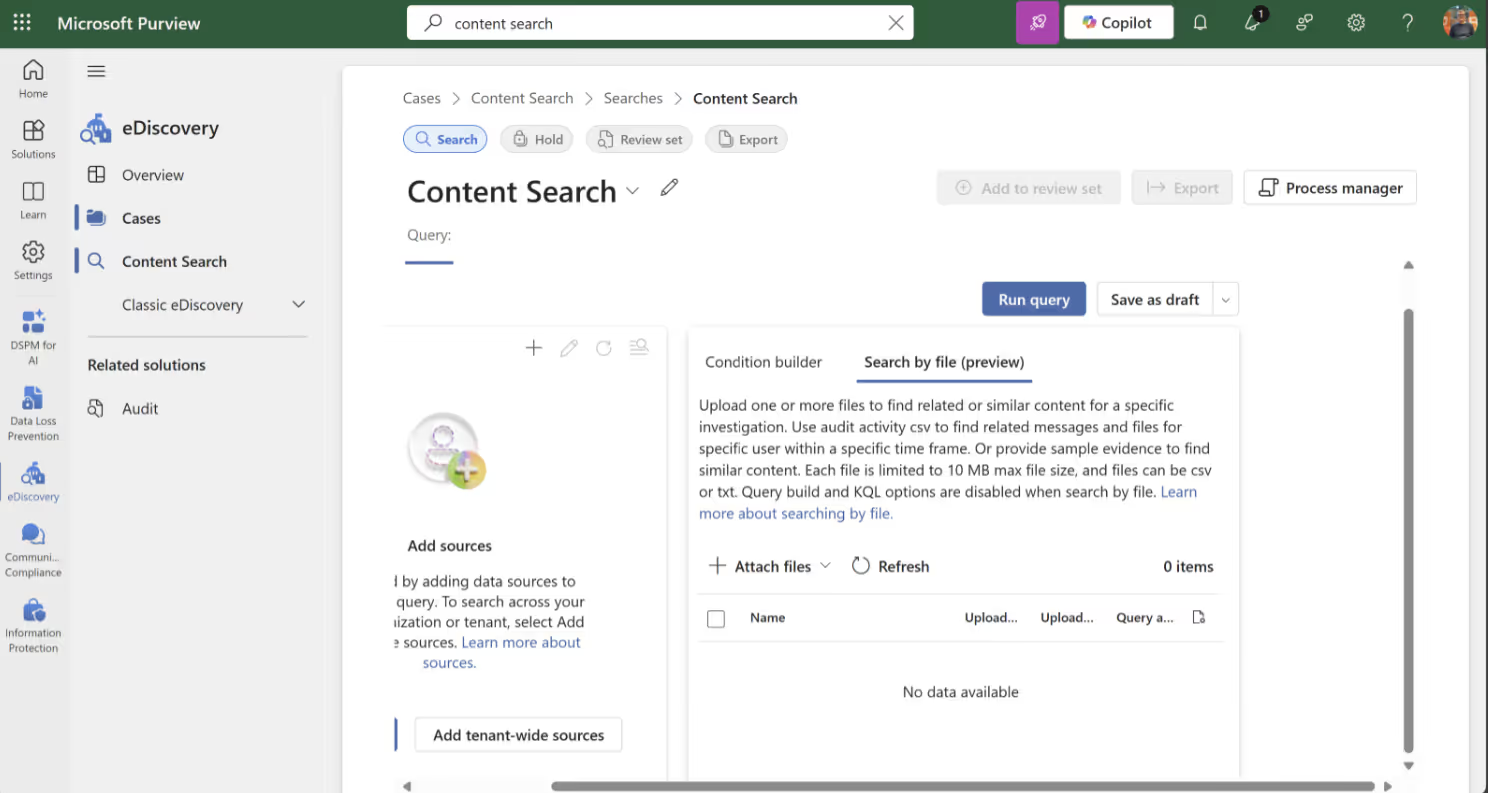

Content Search

If you’re curious about where your sensitive information is stored in SharePoint, OneDrive or Exchange, you can use Content Search.

With these insights, you can target actions like securing, moving or deleting sensitive information from places you don’t want it. That said, it’s easier to see the exact content of that sensitive information with Activity Explorer.

Keep in mind that Content Search will soon be deprecated. The new experience can be seen below:

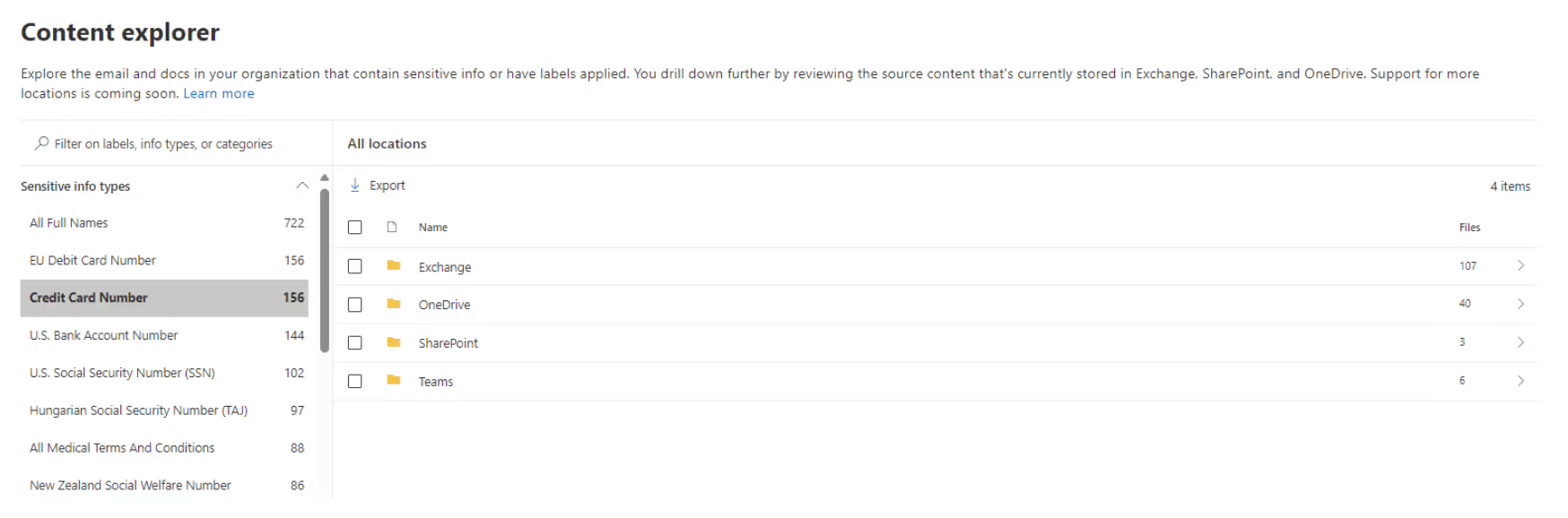

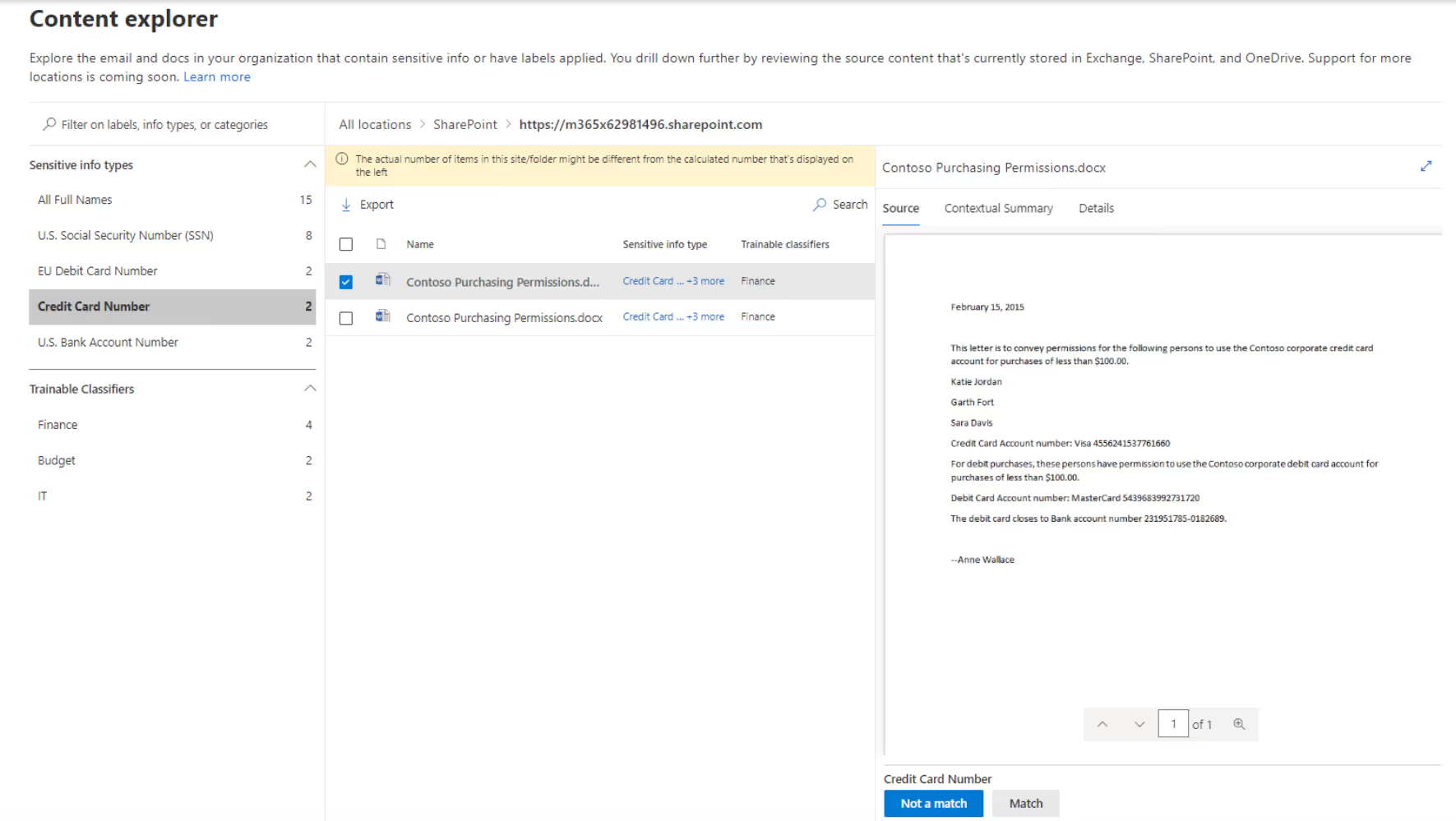

Activity Explorer

The capabilities of Activity Explorer are similar to those of Content Search. It gives your organization insight into the locations where sensitive information is stored.

From this view, with the appropriate permissions, you can control the content.

Tools to help protect sensitive information

Keeping sensitive information safe has always been important, but with Copilot, it's become even more vital. As employees navigate through data more easily, the risk of exposure increases.

Microsoft Purview Information Protection (MIP)

MIP helps you classify and safeguard sensitive information using labels. Here’s what you need to know:

- You can apply labels manually or automatically.

- Automatic labeling can link a label to specific sensitive information for added security.

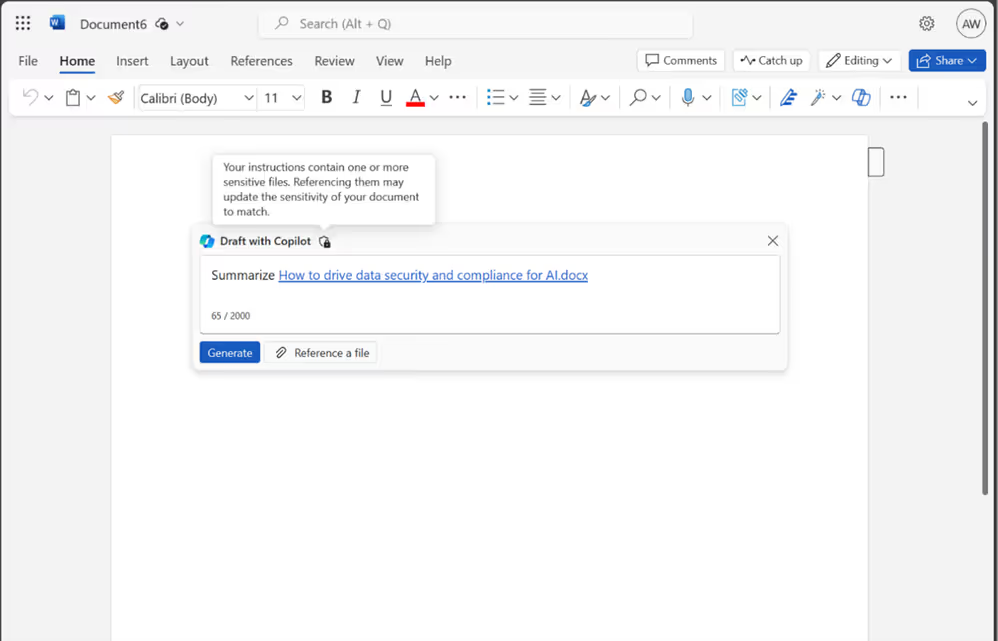

- Integration with Copilot is smooth sailing.

- Employees receive notifications when sensitive information is labeled, ensuring prompt action.

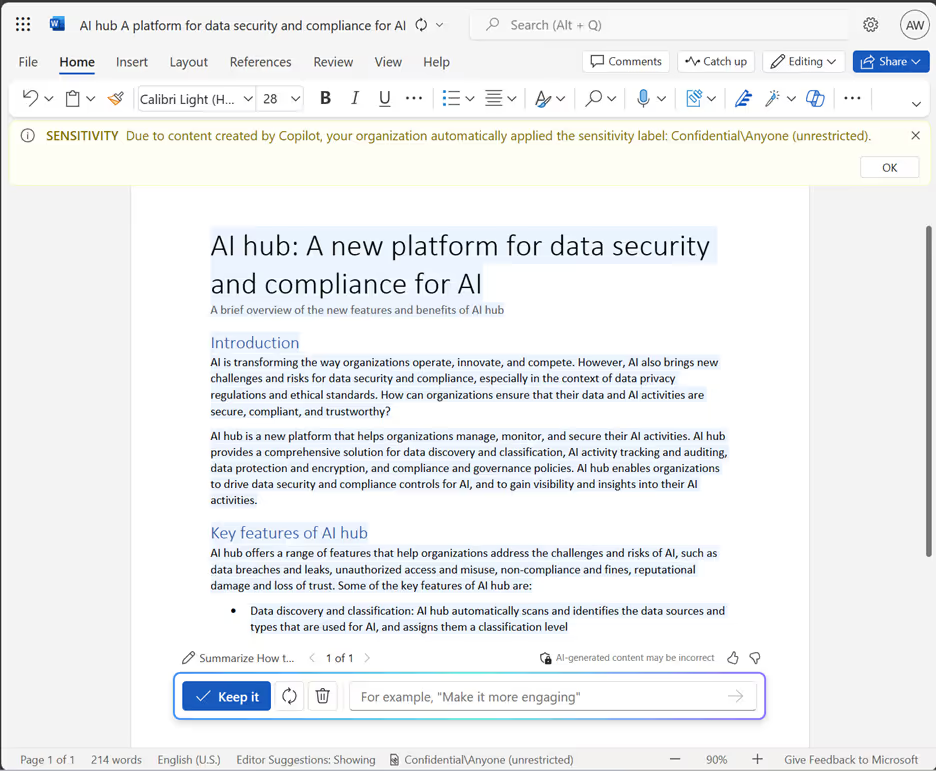

If your organization opts for automatic labeling, which I highly recommend, then a label will be applied automatically:

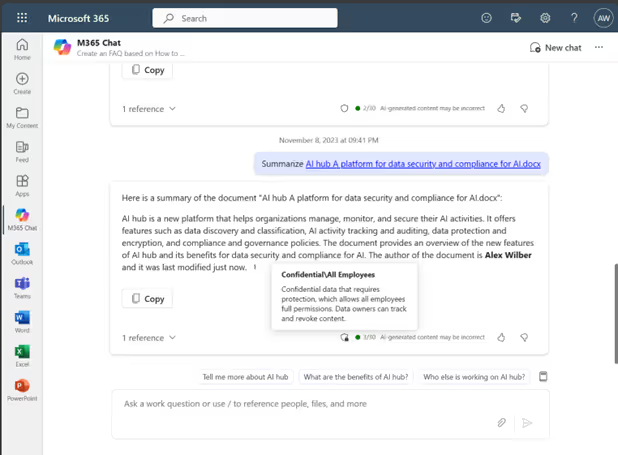
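To make the idea of automatic labeling a bit more concrete, here is a minimal Python sketch of linking a label to a type of sensitive information. It is not how Purview works under the hood, and it does not call any Purview API. The pattern, the `suggest_label` function and the label names "Confidential" and "General" are all assumptions I made up for illustration. The sketch looks for something that resembles a credit card number, confirms it with a Luhn checksum, and suggests a label based on the result.

```python
import re

# Hypothetical pattern for illustration only; this is not one of
# Purview's built-in sensitive information types.
CARD_PATTERN = re.compile(r"\b(?:\d[ -]?){13,16}\b")


def luhn_valid(candidate: str) -> bool:
    """Return True if the digits in candidate pass the Luhn checksum."""
    digits = [int(ch) for ch in candidate if ch.isdigit()]
    if len(digits) < 13:
        return False
    total = 0
    # Walk the digits from right to left and double every second one.
    for index, digit in enumerate(reversed(digits)):
        if index % 2 == 1:
            digit *= 2
            if digit > 9:
                digit -= 9
        total += digit
    return total % 10 == 0


def suggest_label(text: str) -> str:
    """Suggest a (hypothetical) label name based on the content of text."""
    for match in CARD_PATTERN.finditer(text):
        if luhn_valid(match.group()):
            return "Confidential"
    return "General"


if __name__ == "__main__":
    # 4111 1111 1111 1111 is a widely used test card number.
    print(suggest_label("Card 4111 1111 1111 1111 on file"))
    print(suggest_label("Meeting notes for Tuesday"))
```

The checksum step matters: a pattern match alone would flag any long run of digits, while the extra validation cuts down on false positives. Real sensitive information types usually combine several signals in a similar way.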

Labeling serves as a proactive measure to prevent potential data breaches. Plus, you can always find a reference to a document in a labeled prompt.

Microsoft Purview Data Loss Prevention (DLP)

DLP can monitor how sensitive information is handled, like sharing it with external people or copying it into external applications or services such as ChatGPT. Based on this monitoring, you can trigger a notification or apply protection. The following example shows how sensitive information is protected from being pasted into an external AI service (Google Gemini):

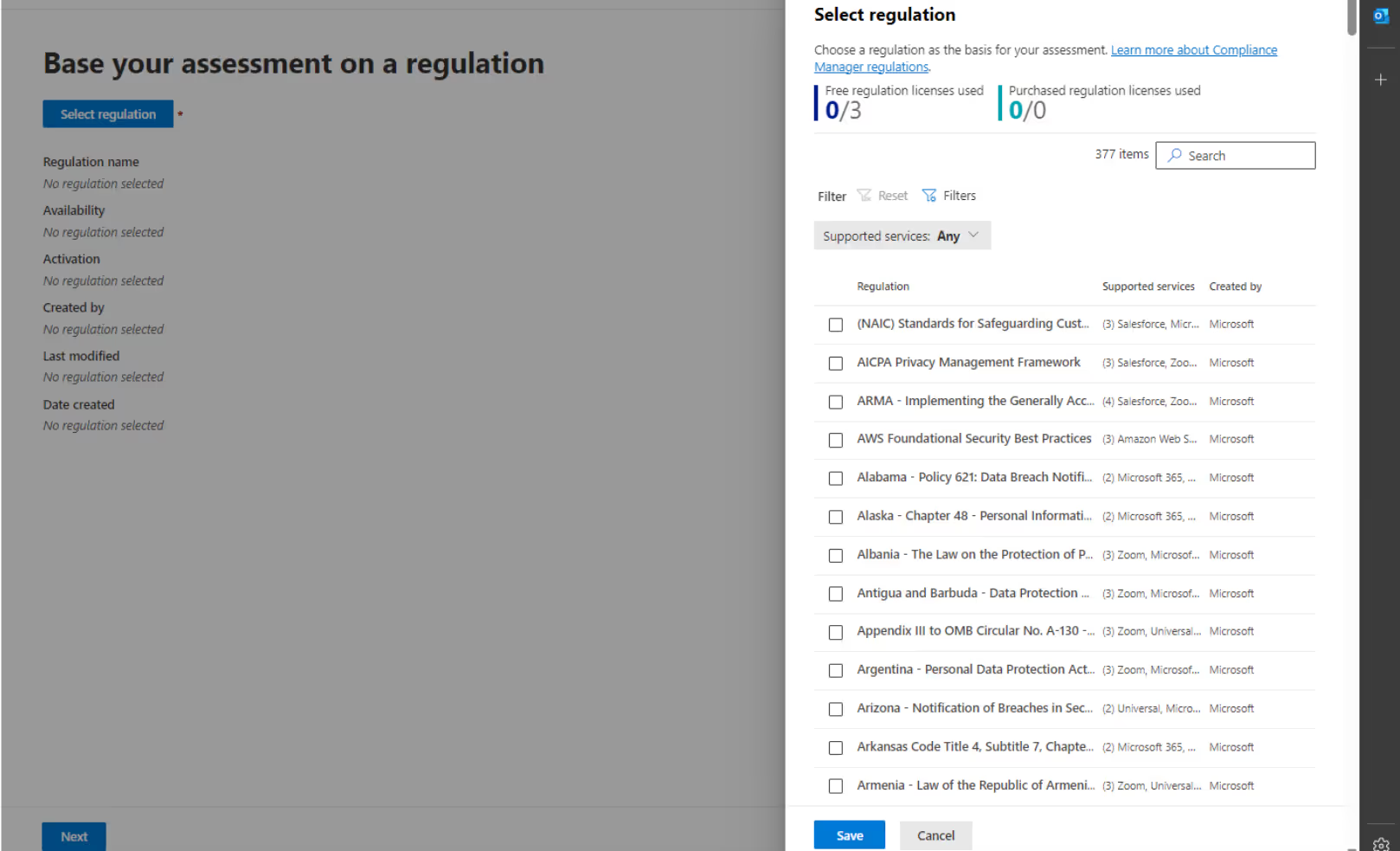
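If you like to think in rules, the monitor-then-act behavior can be sketched in a few lines of Python. This is only a mental model under my own assumptions, not the way DLP policies are actually defined or evaluated in Purview. The `Activity` class, the `evaluate` function, the domain lists and the outcome names "allow", "notify" and "block" are all hypothetical.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Activity:
    """A simplified, hypothetical record of what an employee did."""
    user: str
    action: str               # "paste" or "share" in this sketch
    destination: str          # site hostname or recipient domain
    has_sensitive_info: bool  # result of an earlier classification step


# Hypothetical configuration values, for illustration only.
INTERNAL_DOMAINS = {"contoso.com"}
RESTRICTED_AI_SITES = {"chatgpt.com", "gemini.google.com"}


def evaluate(activity: Activity) -> str:
    """Return "allow", "notify" or "block" for a single activity."""
    if not activity.has_sensitive_info:
        return "allow"
    # Protection: stop sensitive content from reaching an external AI service.
    if activity.action == "paste" and activity.destination in RESTRICTED_AI_SITES:
        return "block"
    # Notification: external sharing is allowed here, but somebody is told.
    if activity.action == "share" and activity.destination not in INTERNAL_DOMAINS:
        return "notify"
    return "allow"


if __name__ == "__main__":
    paste = Activity("megan", "paste", "gemini.google.com", True)
    print(evaluate(paste))
```

The point of the sketch is the ordering: classification happens first, and the outcome then depends on both the action and the destination.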

Microsoft Purview Compliance Manager

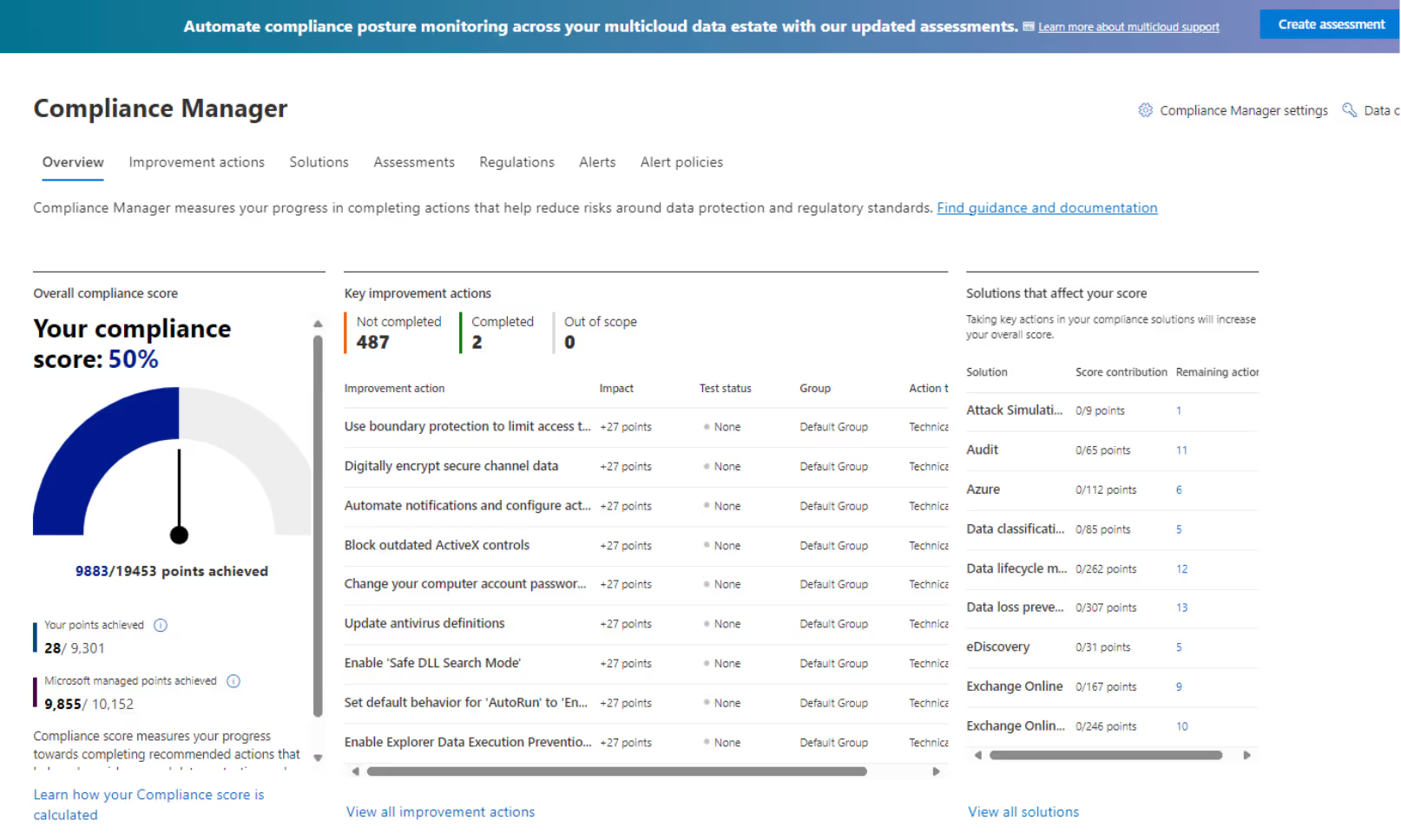

Every organization has to comply with rules and regulations, which can be pretty complex and tricky to keep up with. Adding Copilot into the mix can potentially increase the risk of data breaches.

Compliance Manager in Microsoft Purview can help with your organization’s compliance efforts. It provides a score for your Microsoft 365 tenant’s compliance status.

The score is based on Microsoft's Data Protection Baseline, which includes important rules and standards for keeping data safe and managing it properly. This baseline pulls from NIST CSF, ISO, FedRAMP, and GDPR. You can also add an extra assessment based on the laws or regulations that apply to your organization.
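As a rough way to picture such a score, the Python sketch below assumes it is simply the share of available points your organization has earned by completing improvement actions. The real calculation in Compliance Manager has more nuance, and the action names and point values here are made up for illustration.

```python
def compliance_score(actions: list[tuple[str, int, bool]]) -> int:
    """Return the percentage of points achieved, rounded to a whole number.

    Each action is (name, points, completed). Both the structure and the
    point values are assumptions for this sketch.
    """
    total = sum(points for _, points, _ in actions)
    achieved = sum(points for _, points, done in actions if done)
    if total == 0:
        return 0
    return round(100 * achieved / total)


if __name__ == "__main__":
    # Hypothetical improvement actions.
    actions = [
        ("Require multifactor authentication", 27, True),
        ("Apply sensitivity labels", 27, False),
        ("Review audit logs", 9, True),
    ]
    print(f"Compliance score: {compliance_score(actions)}%")
```

Seen this way, the score is less a verdict and more a to-do list: every open action shows how many points you would gain by completing it.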

Considerations for Microsoft 365 Copilot deployment

Given the developments and potential challenges associated with deploying Copilot, let's think about a few important things:

- Knowing your sensitive information: Does your organization have a clear understanding of the types of sensitive information it has and where this data is located?

- Data control: Do you have control over this sensitive data as it moves around, both inside and outside your organization?

- Data protection across environments: How do you keep this sensitive data safe in all places?

If you're not sure about any of these, it's a good idea to consider using Microsoft Purview for data security.

So, while Copilot takes productivity to new heights, don't forget to buckle up for the data security ride.
