The hidden risks of ignoring Microsoft Purview for protecting sensitive data in Microsoft 365


Microsoft MVP Jasper Oosterveld breaks down the hidden risks organizations face when they overlook Microsoft Purview—and how poor data protection can turn everyday collaboration into a security threat.

I see it all the time: people oversharing sensitive data, pasting it into generative AI tools, or holding onto it long past its relevance. That’s just how we work now in our AI-fueled digital world.

And yet, no alarm bells. No urgency.

Too many organizations still assume that enabling multi-factor authentication (MFA), configuring Conditional Access, and setting SharePoint permissions is enough to protect their sensitive data.

It’s not.

In this article, I’ll walk you through why that assumption can be problematic and what can happen when organizations ignore Microsoft Purview. You’ll see how oversharing, shadow AI, and poor governance quietly put sensitive information at risk, and why protecting your Microsoft 365 data with Purview is critical.

How secure is your Microsoft 365 data?

Go ahead, check for yourself.

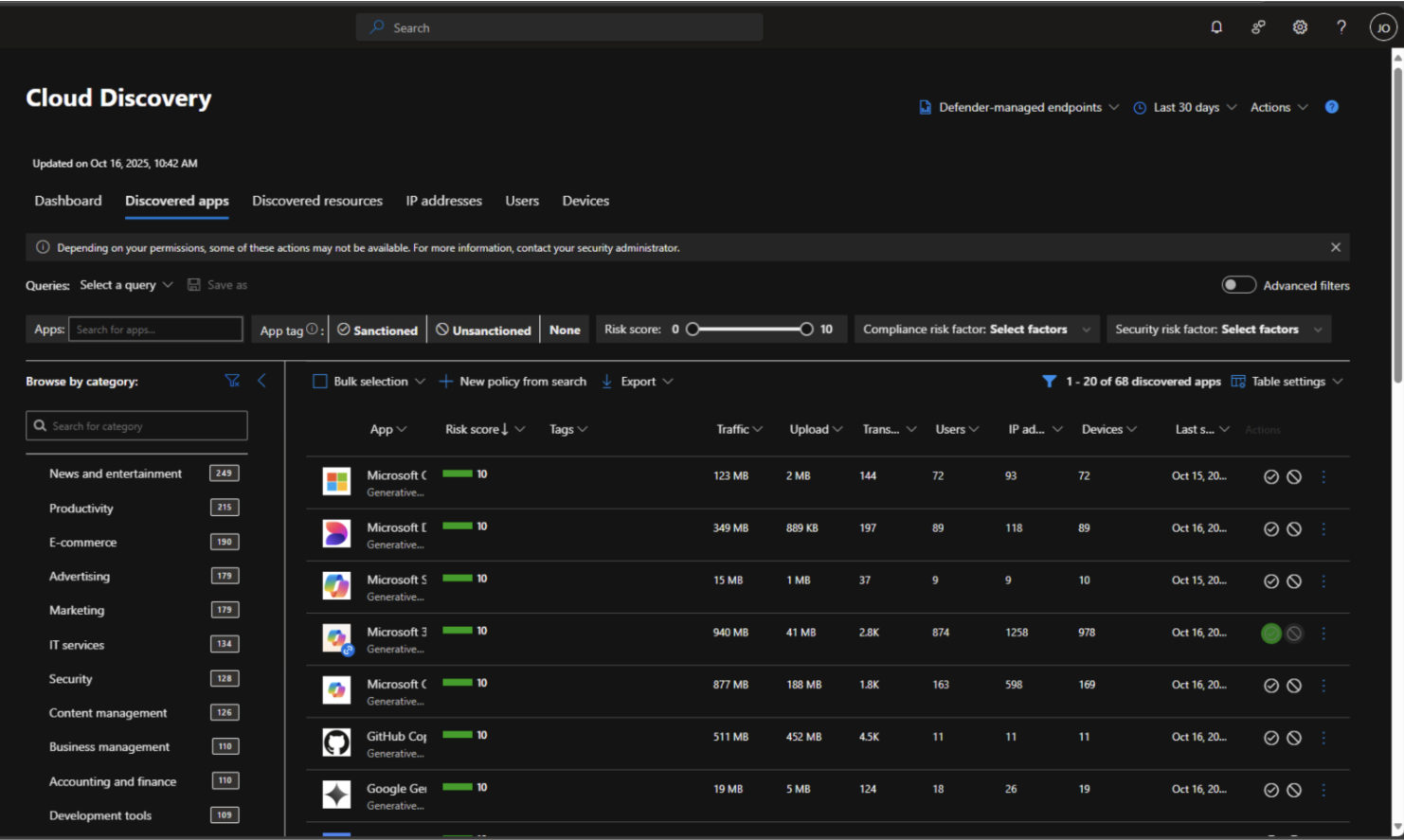

Open Microsoft Defender for Cloud Apps. Go to “App Discovery.” Then search for generative AI tools. You’ll probably find them. Dozens of them.

Shadow AI is a growing concern. Employees are turning to unauthorized apps behind IT’s back, and many organizations are losing visibility and control over their sensitive data, especially when it comes to the use (and misuse) of generative AI.

ShareGate’s recent State of Microsoft 365 report shows 82% of organizations are deploying Copilot with governance gaps still unresolved.

And Microsoft isn’t sugarcoating it: 91% of security and data leaders are not prepared for the risks AI brings.

Now layer Microsoft 365 Copilot on top of that—pulling content from SharePoint, OneDrive, and Teams. If you’re not actively protecting your data with tools like Microsoft Purview, you’re leaving the door wide open.

The modern M365 workplace is a data risk minefield

We live in an oversharing culture. Just scroll through social media to see how much we give away. That same behavior has crept into the workplace. Most people don’t stop to consider whether the person on the receiving end should even see the data they’re sharing.

The same goes for generative AI tools and Microsoft Copilot. To most employees, these tools are helpful assistants, but how they use them can put sensitive data at risk. Employees copy and paste sensitive data into prompts and freely share the results with colleagues or, worse, with external contacts.

But oversharing isn’t our only issue. We also store everything forever. Take a look at your own OneDrive or SharePoint sites. Do you really need that folder from a project ten years ago? Probably not.

According to ShareGate's State of Microsoft 365 report, data growth and Copilot content creation are overwhelming current governance structures—and most teams still don’t have a plan in place.

AI raises new challenges

Microsoft 365 Copilot can access and surface any content a user has permission to view across Microsoft 365—including SharePoint, OneDrive, Teams, Exchange, and other connected sources. That means even outdated or inaccurate data can appear in its responses. And because most users tend to take AI-generated outputs at face value, trusting them as fact, the risk multiplies.

Imagine an AI-generated recommendation based on obsolete customer data. That’s a disaster waiting to happen.

Shadow AI is already here

Shadow AI is when employees use AI tools without the company’s knowledge or approval.

The intent is usually well-meaning productivity, but the data exposure risk is hidden: any files or text an employee uploads are processed and stored by an external AI tool, outside the organization’s secure Microsoft 365 environment.

For years, I’ve seen organizations overlook or struggle with data security and Microsoft Purview implementation.

So why is it that, despite the awareness, so many organizations still fall behind when it comes to securing their data? Let’s explore that question in the next section.

Why Microsoft Purview implementations are lagging

After reviewing recent data security reports, one thing is clear: awareness of the risks is high, yet action often falls short. Organizations know their sensitive information is at risk, especially with the rise of AI tools in the workplace, but many still struggle to translate that awareness into effective protection.

So, the million-dollar question remains: Why aren’t Microsoft Purview projects taking off—or delivering the expected results?

Lack of urgency and “pain”

For most employees, data security simply isn’t top of mind. They’re focused on their day-to-day work and just want to get things done. The “pain” of neglecting security tasks—like labeling data or deleting files according to retention policies—often isn’t felt directly. As long as these actions don’t disrupt their workflow, there’s little incentive to adopt or engage with Microsoft Purview.

According to Microsoft’s security report, more than 30% of decision-makers say they don’t know where or what their business-critical data is.

Nobody taking ownership

In most organizations, data security responsibility is spread across multiple roles—CISOs, security officers, Microsoft 365 platform owners, risk officers, and more. Too often, these stakeholders point fingers instead of collaborating. The result? No one truly takes ownership, and the organization’s overall data security posture suffers.

Employee concerns

It’s also understandable that employees have questions and reservations about Microsoft Purview’s impact on their work. These concerns usually center on sensitivity labels with encryption, restrictive Data Loss Prevention (DLP) policies, and retention labels tied to records management. Without clear communication and guidance, these tools can feel intrusive or overly restrictive.

Where to begin?

Data security is a complex topic, and Microsoft Purview doesn’t make it any easier. At the time of writing, it includes 15 different solutions, leaving many organizations wondering: Where do we even start? Unfortunately, that uncertainty often leads to inaction.

Missing expertise and manpower

Even organizations that have data security teams often find them understaffed and unable to manage critical responsibilities effectively. This matches the feedback I’ve heard from customers over the years. Security requires constant attention, but limited resources and staff are often allocated elsewhere, leaving data security and Microsoft Purview on the back burner.

What’s at risk when you ignore Microsoft Purview

There are serious consequences to ignoring or postponing the protection of your sensitive data with Microsoft Purview. The longer you wait, the greater the risk to your organization.

Data leaks happen, whether you realize it or not

Data leaks can happen in any organization. Sometimes by mistake, sometimes through oversight. Regardless, the impact can be significant. Even a small breach can erode customer trust and create compliance challenges.

83% of organizations experience more than one data breach in their lifetime.

While it’s impossible to eliminate every risk, implementing the right controls helps you minimize exposure and respond effectively.

Unprotected data leads to oversharing

Without Microsoft Purview, sensitive data can easily end up in the wrong hands or be processed by Microsoft 365 Copilot without proper safeguards. This creates the risk of oversharing confidential information or generating content based on outdated or inaccurate data.

Uncontrolled AI can expose your data

When employees paste sensitive company information into external AI tools, that data may be stored, used to train models, or accessed by third parties—depending on the service’s policies.

Once shared, you lose control over where your data goes and how it’s used.

Good to know

Microsoft’s AI solutions include strict privacy protections to ensure your data stays within your organization’s control.

Here are some key points from Microsoft’s official privacy FAQ for Microsoft Copilot:

- Data control: Users can opt out of having their conversations used to train AI models or personalize results.

- Data retention: Copilot conversations are saved for 18 months by default but can be deleted anytime.

- File handling: Uploaded files are securely stored for up to 30 days and never used to train Copilot models.

You can’t protect what you can’t see

Sensitive information is scattered across Microsoft 365 and beyond. Without Microsoft Purview, you have little visibility into where that data lives, making it nearly impossible to manage or secure. You can’t govern what you can’t find. That’s why having clarity and control over your Microsoft 365 environment makes all the difference.

In the next article, I’ll show you how to get started with Microsoft Purview, with practical steps and demos to help you secure your organization’s data.
